SUBJUNCTIVE: a grammatical mood expressing doubt, desire, hypothetical situations, or uncertainty

INDICATIVE: a grammatical mood used to state facts, express opinions as realities, or ask questions

As any system evolves in time, it moves at each instant from all the things that can happen next to the thing that does happen next. Much of the macro-physical world does so in a deterministic manner: if you know certain parameters you can predict what’s next. Interacting humans, by contrast, seem to stumble from one moment of freedom to the next, somehow grasping at each moment the next action that becomes factual history.

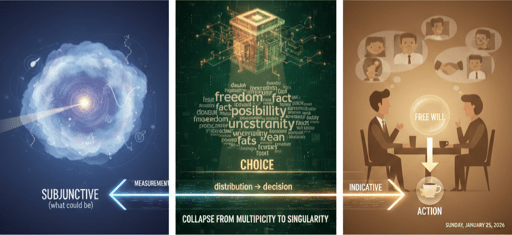

The human case is an example of a general problem: how do systems move from multiplicity (superposition, distribution, imagined possibilities) to singularity (definite outcome, token, action)? How do you get from subjunctive (what could be) to indicative (what is and then was)?

Let’s play with three exemplars: measurement in quantum mechanics selecting from the multiplicity of states an atom is in; the final stage of a transformer large language model when from a distribution over the vocabulary a next word is chosen; everyday social interaction in which various cognitive processes are going on and then the agent chooses a response action from among whatever could be imagined as possible “next moves.”

Clearly these three processes are not the same. All three do involve some kind of “collapse” from a space of possibilities to a single outcome, but the analogy is strained. The analogy first struggles with the nature of the indeterminacy. In the quantum case the unmeasured system evolves according to the Schrödinger equation. The wavefunction spreads out in true superposition, not just “we don’t know which state it’s in” but actually “in” all possible states. For the LLM we have a probability distribution over all possible tokens. In the human case there is some cognition of “all of my possibilities.”

A second problem is that the “selection mechanism” is not simply analogous. In physical systems, when we measure, we get a definite outcome with probabilities given by quantum mechanical calculations. Physicists don’t really agree on how that happens. Does measurement cause genuine collapse of superposition, or do we experience just one branch of a multiverse of outcomes, or is the randomness only epistemic? In the LLM case, the last step of the transformer is that we generate a pseudorandom number (which, given a seed, is actually deterministic) and use it to select from the discrete probability distribution. In the human case some sort of more or less free will choice generates the next action. We experience this as having a deliberative and evaluative dimension that seems to not export all the non-randomness upstream.

The analogy also breaks down in terms of who or what does the selecting. In the quantum case, physical interaction with the environment does the selecting. The system doesn’t “choose”; measurement is imposed from outside. In an LLM it is a programmed procedure. Someone built the system to use softmax with temperature T and that’s just what happens. For humans the selection is phenomenologically experienced as choice – there’s a moment where “I could do X or Y” becomes “I did X.” Whether this is metaphysically free or causally determined, it feels different from having a measurement imposed or following a programmed sampling rule, and we usually treat it differently when assessing the moral rightness or wrongness of actions.
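To make the LLM case concrete, here is a minimal sketch of the sampling step described above: logits are turned into probabilities by softmax with temperature T, and a seeded pseudorandom number picks one token. The vocabulary, the logit values, and the helper names (`softmax`, `sample_token`, `generate`) are all hypothetical, chosen only for illustration; real models work over tens of thousands of tokens and often add further filtering before sampling.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(vocab, logits, temperature, rng):
    """Draw one pseudorandom number and use it to select a token."""
    probs = softmax(logits, temperature)
    u = rng.random()  # uniform in [0, 1)
    cumulative = 0.0
    for token, p in zip(vocab, probs):
        cumulative += p
        if u < cumulative:
            return token
    return vocab[-1]  # guard against floating-point rounding


# Hypothetical toy vocabulary and scores, purely for illustration.
vocab = ["yes", "no", "maybe"]
logits = [2.0, 1.0, 0.5]


def generate(seed, n=5, temperature=1.0):
    """Sample n tokens from the same distribution using one seeded generator."""
    rng = random.Random(seed)
    return [sample_token(vocab, logits, temperature, rng) for _ in range(n)]


run_a = generate(seed=42)
run_b = generate(seed=42)
print(run_a == run_b)  # True: same seed, same sequence of "choices"
```

With the seed fixed, the whole sequence of selections is reproducible, which is the sense in which the randomness here is only apparent. Pushing the temperature toward zero concentrates nearly all the probability on the highest-scoring token, so the multiplicity shrinks before any selection takes place.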

This meditation leads to more questions than answers.

Does this framing highlight something important for alignment? Do different mechanisms for collapsing possibility space have different implications for accountability and learning? What kind of “selection mechanism” do we want AI systems to have? Is pure softmax sampling satisfactory? Or should we require something that models deliberation that can be revealed as explanation or justification? Does the selection mechanism relate to whether an agent can genuinely “take roles” or respond to norms rather than just pattern-match?

Do norms act only upstream, biasing what possible next actions get what probability before an arbitrary or motivated choice is made? Or do norms guide the selection? Do humans have a moment of pure probabilistic selection? Do these three possibilities (LLM sampling as a mechanical probability experiment, human choice that at least phenomenologically involves deliberation, and quantum measurement as something we genuinely don’t understand) exhaust the space of ways that multiplicity can collapse to singularity? Are there biological/evolutionary selection processes that work differently? Economic market selection?

When an LLM refuses a request, can that be norm following? Or would that require something more – deliberation, evaluation – in the mechanics of the model’s operation?