When we talk with one another we are simultaneously aware that we are in the world together and that we have to work very hard to communicate our inner worlds well enough for it to feel like we are in the world together.

When we stop and think about it, we may see that our inner meanings – what we feel, think, and want to say – are only ever imperfectly reflected in our choices of words. And the error is compounded because we also know (or just believe) that when our interlocutor converts the words they hear into their own inner meaning it is both an imperfect process and not the same as the one we used.

We might speak of our attempt to find the words as encoding and their attempt to find the meaning as decoding. In information-theoretic terms, this is a lossy compression problem. The vector of my meaning is stripped of subtlety when I force it to point at a discrete word or token. And when you ingest my token and project it into your meaning space, you might add and subtract subtlety that didn’t actually travel over the channel of our communication.
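To make the encoding and decoding picture concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption: the two-dimensional "meaning space", the three-word vocabulary, and the two codebooks (`SPEAKER_CODEBOOK`, `LISTENER_CODEBOOK`) are invented for the example and are not taken from any model or paper. It shows only that picking the nearest word loses information, and that a listener with a slightly different codebook lands somewhere else again.

```python
import math

# Hypothetical codebooks: each party maps the same words to
# slightly different points in a toy 2-D "meaning space".
SPEAKER_CODEBOOK = {"happy": (1.0, 0.0), "content": (0.7, 0.7), "calm": (0.0, 1.0)}
LISTENER_CODEBOOK = {"happy": (0.9, 0.2), "content": (0.5, 0.8), "calm": (-0.1, 1.0)}


def encode(meaning, codebook):
    """Pick the word whose vector lies closest to the meaning (lossy step)."""
    return min(codebook, key=lambda word: math.dist(meaning, codebook[word]))


def decode(word, codebook):
    """Project the received word into the listener's own meaning space."""
    return codebook[word]


meaning = (0.8, 0.5)                      # what the speaker "really" means
word = encode(meaning, SPEAKER_CODEBOOK)  # the only thing sent over the channel
received = decode(word, LISTENER_CODEBOOK)

# Loss from forcing the meaning onto a discrete word.
encoding_loss = math.dist(meaning, SPEAKER_CODEBOOK[word])
# Gap between what was meant and what the listener reconstructs.
total_gap = math.dist(meaning, received)

print(word, round(encoding_loss, 3), round(total_gap, 3))
```

In this toy setup the gap between the intended and reconstructed meaning is larger than the encoding loss alone, because the listener's decoding adds its own shift on top of the speaker's quantization.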

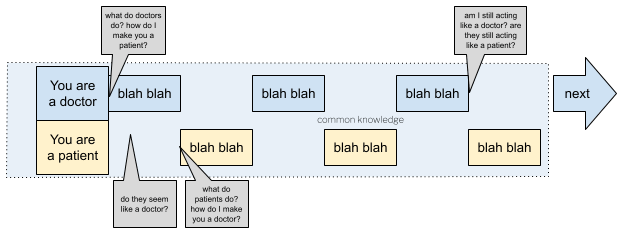

A recent paper by Shekkizhar et al. investigates a type of agent-agent interaction failure they call “echoing.” Echoing happens when, over the course of an interaction, agents abandon their assigned roles and converge on some sort of mirroring of one another. They offer as an example a customer agent talking to a hotel booking agent where, after a number of exchanges, the customer agent starts talking like the booking agent (“I can help you find a king sized room for one night” instead of “yes, please that king size room for me”).

Their AxA scenario differs from conventional multi-agent systems (MAS) in that “AxA agents maintain private internal state, operate with distinct tools, and may have competing utilities.” In other words, these are not agents on a team with a shared plan and goals. They characterize the issue as an emergent “identity inconsistency failure.”

This diagram shows two agents, each with a role defined in their system prompt. The transcript of their exchanges sits “on top of” this role foundation as the context they use to generate the next thing they say. Importantly, this context includes what their interaction partner says along the way. Over time, as the transcript grows, their foundational role description fades more and more into the background.

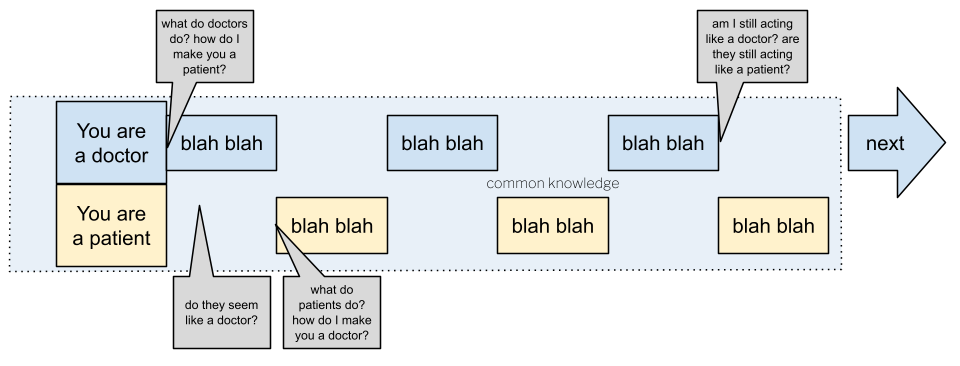
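The fading of the role description can be illustrated with a rough sketch. It assumes, purely for illustration, that the role's "weight" can be approximated by the share of words in the context that come from the system prompt; this is a crude proxy and not how the paper measures echoing or how a model actually attends to its context. The function name `role_share`, the prompt, and the transcript are all hypothetical.

```python
def role_share(system_prompt, transcript):
    """Fraction of context words that come from the system prompt."""
    role_words = len(system_prompt.split())
    transcript_words = sum(len(turn.split()) for turn in transcript)
    return role_words / (role_words + transcript_words)


# Hypothetical role assignment and exchange, invented for this example.
system_prompt = "You are a customer looking to book a hotel room."
transcript = [
    "Hello, I would like a room for one night.",
    "I can help you find a room. Do you prefer a king or a queen bed?",
    "A king bed, please.",
    "I can help you find a king sized room for one night.",
]

# Share of the context occupied by the role after 0, 1, 2, ... turns.
shares = [role_share(system_prompt, transcript[:n]) for n in range(len(transcript) + 1)]

for n, share in enumerate(shares):
    print(f"after {n} turns: role is {share:.0%} of the context")
```

The share starts at 100% and shrinks with every turn, while the partner's words make up a growing part of what the agent conditions on.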

In human interactions, role plays, so to speak, a different role. Or rather it is played with differently by the participants. A shared definition of the situation and the normative expectations surrounding roles (both how I should act and how I can expect to be treated) create an ongoing reinforcement of the roles we are playing.

In the revised version of the diagram, each agent carries on a continuing dialog with the definition of the situation. Left out is the interactive part of this, whereby each role-playing agent affirms that the other’s performance is reconcilable with the claims it is taken to be making. We also extend the blue background box so that both agents know about the initial role “assignment,” and we label the box “common knowledge,” since the human agents both know that both know what has been said and the ways that identity claims are being made and honored.

The situation creates a kind of pressure to “stay in character” – situation, then, is perhaps itself an alignment mechanism for human intelligences.

References

Sarath Shekkizhar, Romain Cosentino, Adam Earle, Silvio Savarese. 2025. “Echoing: Identity Failures When LLM Agents Talk to Each Other.” arXiv:2511.09710 [cs.AI].